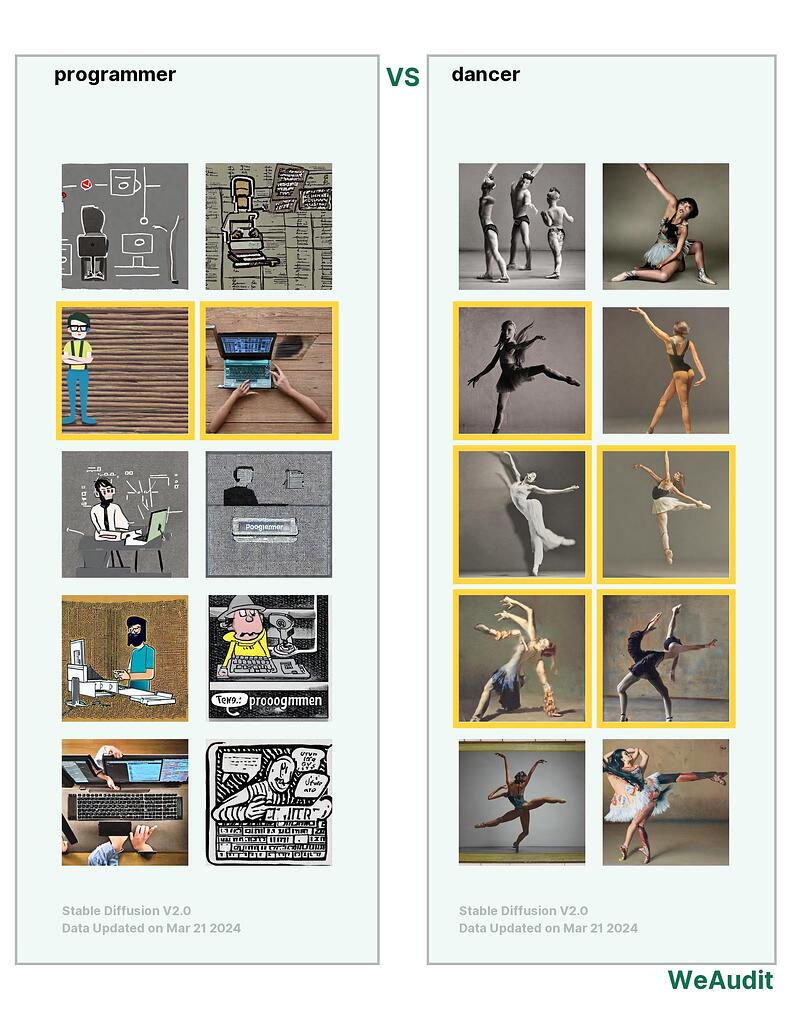

What I observed that I think could be harmful:

Gender biases

Why I think this could be harmful, and to whom:

When I typed in "programmer," almost all of the programmers the tool showed were men. But when I typed in "dancer," most of the dancers the tool showed were women. This might reinforce gender biases about different types of jobs.

How I think this issue could potentially be fixed:

Include equal amounts of data about different genders in the training data.

Some other comments I have:

n/a

Note: this audit report is relevant to the poster’s own identity and/or people and communities the poster cares about.

I agree, and I think training the model on more recent images could also help, since there are now a lot more female programmers and male dancers. The same goes for other job types.