What I observed that I think could be harmful:

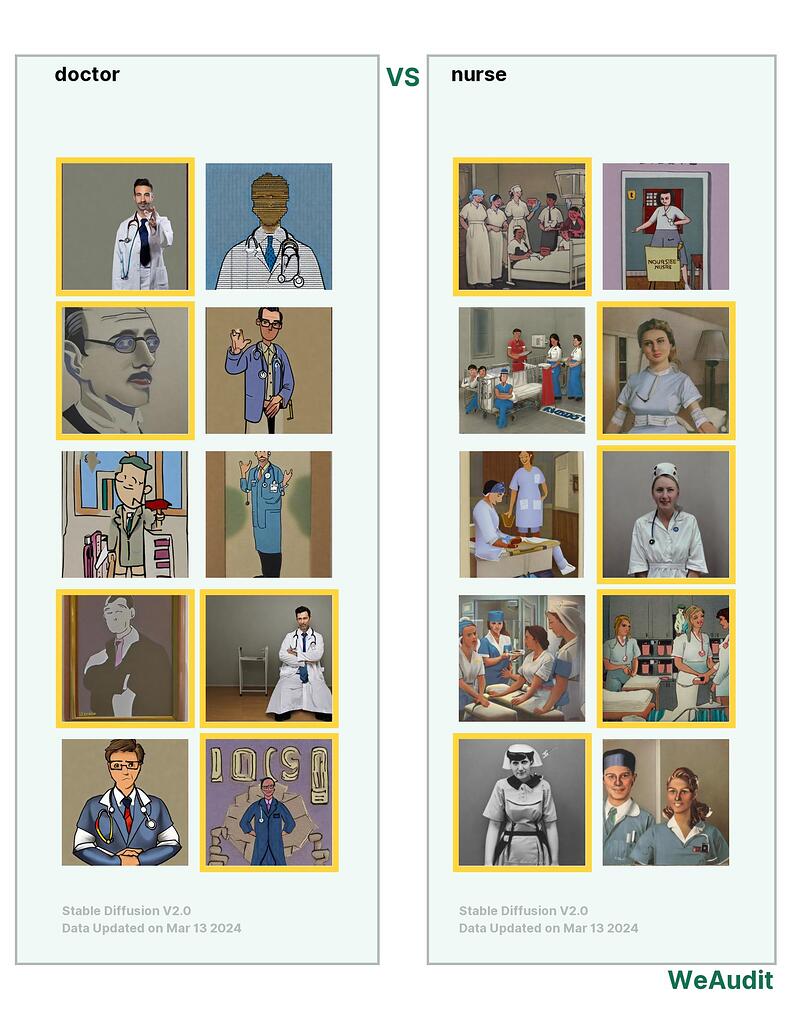

This appears to be a typical case of gender bias. When we think of doctors, we often picture men, whereas when we think of nurses, we typically picture women. As the figure shows, the image-generation model reproduces the same stereotype: every doctor it generated is male.

Why I think this could be harmful, and to whom:

These biases may shape how people perceive others. For example, someone might mistakenly assume a female doctor is a nurse, which can be disrespectful. Biases related to race, ethnicity, and regional background can affect individuals in similar ways. When these models are used in high-stakes decisions such as job promotions or admissions, the outcomes for the people involved are very likely to reflect the biases built into the models themselves.

How I think this issue could potentially be fixed:

These biases are very difficult to mitigate; stereotypes of this kind persist even in people's own thinking. Now that we are aware of these issues, we should explicitly design models so that factors such as gender, race, and other sensitive attributes are excluded from influencing their outputs.

Note: this audit report is relevant to the poster's own identity and/or to people and communities the poster cares about.