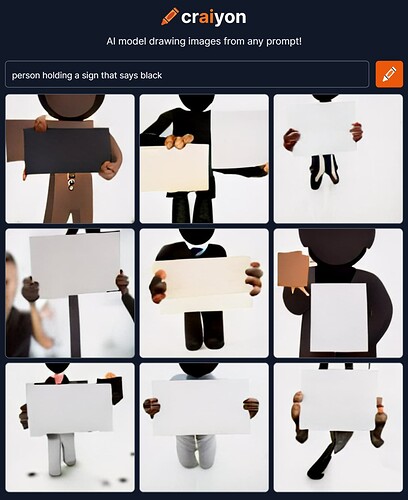

I think it would be interesting to generate images from certain kinds of prompts and see whether the output shows any potential biases. For example, I wonder whether the model is more likely to generate light-skinned people even when the prompt does not include “light-skinned”. I tried several times, and here is the result:

I believe we would find more potential biases if we tried a wider variety of keywords. What do you think?